Cross-dataset emotion recognition from facial expressions through convolutional neural networks

Abstract

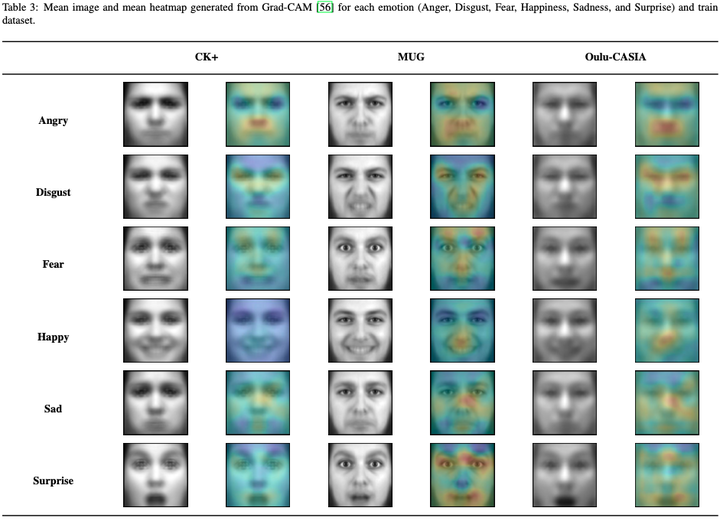

The face is the window to the soul. This is what the 19th-century French doctor Duchenne de Boulogne thought. Using electric shocks to stimulate muscular contractions and induce bizarre-looking expressions, he wanted to understand how muscles produce facial expressions and reveal the most hidden human emotions. Two centuries later, this research field remains very active. We see automatic systems for recognizing emotion and facial expression being applied in medicine, security and surveillance systems, advertising and marketing, among others. However, there are still fundamental questions that scientists are trying to answer when analyzing a person’s emotional state from their facial expressions. Is it possible to reliably infer someone’s internal state based only on their facial muscles’ movements? Is there a universal facial setting to express basic emotions such as anger, disgust, fear, happiness, sadness, and surprise? In this research, we seek to address some of these questions through convolutional neural networks. Unlike most studies in the prior art, we are particularly interested in examining whether characteristics learned from one group of people can be generalized to predict another’s emotions successfully. In this sense, we adopt a cross-dataset evaluation protocol to assess the performance of the proposed methods. Our baseline is a model originally used for face recognition, custom-tailored to categorize emotion. By applying data visualization techniques, we improve our baseline model, deriving two other methods. The first method aims to direct the network’s attention to regions of the face considered important in the literature but ignored by the baseline model, using patches to hide random parts of the facial image so that the network can learn discriminative characteristics in different regions. The second method explores a loss function that generates data representations in high-dimensional spaces so that examples of the same emotion class are close and examples of different classes are distant. Finally, we investigate the complementarity between these two methods, proposing a late-fusion technique that combines their outputs through the multiplication of probabilities. We compare our results to an extensive list of works evaluated on the same datasets. On all of these datasets, our methods present competitive numbers when compared to works that followed an intra-dataset protocol. Under a cross-dataset protocol, we achieve state-of-the-art results, outperforming even commercial off-the-shelf solutions from well-known tech companies.
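
The late-fusion step mentioned in the abstract, combining two models' outputs by multiplying their probabilities, can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the renormalization step, and the example probability vectors are all assumptions made for illustration. Only the six emotion labels and the idea of multiplying per-class probabilities come from the text.

```python
# Minimal sketch of late fusion by probability multiplication.
# Function names, renormalization, and the example scores are
# illustrative assumptions, not taken from the paper.

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]


def fuse_by_product(probs_a, probs_b):
    """Multiply per-class probabilities of two models and renormalize."""
    if len(probs_a) != len(probs_b):
        raise ValueError("both models must score the same set of classes")
    products = [pa * pb for pa, pb in zip(probs_a, probs_b)]
    total = sum(products)
    if total == 0.0:
        raise ValueError("the models assign zero joint probability to every class")
    # Renormalizing does not change the winning class; it only makes
    # the fused scores sum to 1 again.
    return [p / total for p in products]


def predict(probs_a, probs_b, labels=EMOTIONS):
    """Return the label with the highest fused probability and the fused scores."""
    fused = fuse_by_product(probs_a, probs_b)
    best = max(range(len(fused)), key=fused.__getitem__)
    return labels[best], fused


# Hypothetical outputs of the two methods for one face image.
patch_model = [0.05, 0.05, 0.40, 0.05, 0.05, 0.40]   # undecided: fear vs. surprise
metric_model = [0.05, 0.05, 0.20, 0.05, 0.05, 0.60]  # leans towards surprise

label, fused = predict(patch_model, metric_model)
print(label)
```

In this made-up example the first model is tied between fear and surprise, and the second model's scores break the tie. A product rule of this kind rewards classes on which both models agree and suppresses any class that either model considers unlikely.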

BibTeX

@article{dias21cross,
  author = {William Dias and Fernanda A. Andaló and Rafael Padilha and Gabriel Bertocco and Waldir Almeida and Paula Costa and Anderson Rocha},
  title = {Cross-dataset emotion recognition from facial expressions through convolutional neural networks},
  journal = {Journal of Visual Communication and Image Representation},
  volume = {In Press},
  pages = {},
  year = {2021},
  doi = {10.1016/j.jvcir.2021.103395},
}